What Happens When AI Answers Your Business Calls?

AI Voice Agent Integration Roadmap

Phase 1: Architecture Complete | Pending Integration Study | Pre-Implementation Phase

Chain of Thought: Development Journey

1. We need to build an AI agent for a realtor.
2. We need to define the technical implementation.
3. We can probably use Bland AI as the voice AI platform.
4. We need to build the ADK agents to connect to Bland AI.
5. We may not need Bland AI for this, a realization gained after building the ADK agents.
6. Let's connect the agents to a Twilio number so that we can call them directly.
7. Let's go deeper into the concepts behind ADK live voice agents.
8. We don't need a third-party voice platform at all: Gemini Live API can connect directly to Twilio.

Live Voice Interface Demo

Here’s what the actual voice interface will look like when deployed. This clean, Apple-inspired design focuses on simplicity—just tap the voice button to start a conversation with the AI agent system.

Try calling: 000-000-0000 to experience the AI voice agent system directly. The interface is intentionally minimal—when someone calls your business number, they’ll interact with this same conversational AI through their phone, while the sophisticated multi-agent system works behind the scenes to qualify leads, search properties, and coordinate follow-up actions.

The Core Idea

What if your business phone could think? Not just route calls or play hold music, but actually understand what callers want, ask intelligent follow-up questions, and make decisions about how to handle each lead? That’s what I’ve been building—a multi-agent AI system that turns every incoming call into a qualified business opportunity.

The architecture is surprisingly elegant: when someone calls, Gemini Live API handles the natural conversation through Twilio while three specialized agents work behind the scenes. An Orchestrator manages the flow, a Realtor Agent analyzes property requirements and market data, and a Secretary Agent handles scheduling and follow-up coordination. They communicate through shared session state, each contributing their expertise to build a complete picture of the lead.
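The shared-session-state idea can be sketched in plain Python. This is only an illustration under stated assumptions: the class and function names are hypothetical, the keyword matching stands in for what the model would do, and the agents run one after another here rather than concurrently.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class SessionState:
    """Per-call state that every agent reads from and writes to (hypothetical)."""
    data: dict[str, Any] = field(default_factory=dict)


def realtor_agent(state: SessionState, utterance: str) -> None:
    # Stand-in for model-driven extraction: pick up "<number> bedroom(s)".
    profile = state.data.setdefault("property_profile", {})
    words = utterance.lower().replace(",", " ").split()
    for i, word in enumerate(words):
        if word.startswith("bedroom") and i > 0 and words[i - 1].isdigit():
            profile["bedrooms"] = int(words[i - 1])


def secretary_agent(state: SessionState, utterance: str) -> None:
    # Stand-in for scheduling intent detection.
    text = utterance.lower()
    if "showing" in text or "visit" in text:
        state.data.setdefault("follow_ups", []).append("schedule_showing")


def orchestrator(state: SessionState, utterance: str) -> SessionState:
    # Record the turn, then let each specialist update the shared state.
    state.data.setdefault("transcript", []).append(utterance)
    realtor_agent(state, utterance)
    secretary_agent(state, utterance)
    return state


state = orchestrator(SessionState(), "I want a 3 bedroom house and a showing this week")
print(state.data)
```

Because every agent writes into the same state object, the lead profile accumulates turn by turn without the agents calling each other directly.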

The result? Every caller gets personalized attention, every lead gets properly qualified, and every opportunity gets routed to the right person with the right priority level. All running 24/7 with direct integration between Google’s voice AI and telephony infrastructure.

The System Architecture

I’ve built two interactive demonstrations that show how this works in practice. The first focuses on the business model and cost structure, while the second dives deep into the technical architecture.

Business Model & Cost Analysis

This first demo shows the complete business case—from operational costs to ROI scenarios. You can adjust parameters like call volume and qualification rates to see how the economics work for different business sizes.

Key Insights from the Business Model:

- Cost per qualified lead: $0.45 (vs. $45-85 for human agents)

- Break-even point: Just 1-2 qualified leads per month

- 24/7 operation: Never misses a call, consistent qualification process

- Scalable economics: Costs grow linearly, revenue grows exponentially

Technical Architecture Deep Dive

This second demo explores the technical implementation—how the multi-agent system actually works, the Google ADK framework integration, and the infrastructure choices that make it reliable.

Technical Highlights:

- Gemini Live API: Direct voice conversation handling with natural language understanding

- Twilio integration: Reliable telephony infrastructure for call routing and management

- Google ADK framework: Native multi-agent orchestration with Python

- Cloud Run deployment: Serverless scaling with request timeouts of up to 60 minutes

- Firestore state management: Real-time agent coordination and data persistence

System Flow Overview

Here’s how the entire system works together, from the moment someone calls to when they become a qualified lead:

The system flow demonstrates how Gemini Live API connects directly to Twilio for voice handling, eliminating the need for third-party conversation platforms. When a call comes in through Twilio, Gemini Live API processes the natural language conversation while the multi-agent system coordinates the business logic in real-time. This direct integration simplifies the architecture significantly while maintaining sophisticated conversation capabilities.

The beauty of this architecture is that all three agents work simultaneously during each call. While the caller is having a natural conversation through Gemini Live API, the Orchestrator ensures smooth flow, the Realtor Agent builds a property profile, and the Secretary Agent prepares follow-up actions—all coordinated through shared session state.
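On the Twilio side, one common way to hand a call's audio to your own server is a TwiML response with a `Stream` element nested inside `Connect`, which opens a bidirectional Media Stream over WebSocket. The post does not say which mechanism this project uses, so treat the following as a sketch under that assumption. The function name and the URL are placeholders, and the WebSocket server that would relay audio to Gemini Live API is not shown.

```python
import xml.etree.ElementTree as ET


def build_stream_twiml(websocket_url: str) -> str:
    """Build TwiML asking Twilio to stream call audio to a WebSocket server."""
    response = ET.Element("Response")
    connect = ET.SubElement(response, "Connect")
    # The url attribute points at the (hypothetical) bridge to the voice model.
    ET.SubElement(connect, "Stream", {"url": websocket_url})
    return ET.tostring(response, encoding="unicode")


# Placeholder URL; a real deployment would use its own wss:// endpoint.
print(build_stream_twiml("wss://example.invalid/media-stream"))
```

Twilio media streams carry 8 kHz mu-law audio, so a bridge like this would likely also need to transcode audio in both directions.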

Agent Data Foundation

While the system architecture above shows how the agents communicate, the interactive database manager below shows what data they actually work with. I’ve built comprehensive mock databases that will power the first Bland AI demo—property listings with detailed specifications, real-time agent calendar availability, local market trends, lead history with scoring, and response templates for consistent communication. This data foundation ensures the agents can provide intelligent, contextual responses during live conversations.

Each database serves a specific agent function: the Realtor Agent queries property inventory and market data to match caller requirements, the Secretary Agent checks calendar availability for scheduling showings, and the Orchestrator accesses lead history to understand caller context and select appropriate response templates. This structured data approach ensures consistent, professional interactions while maintaining the flexibility for natural conversation flow.
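As a rough picture of what a Realtor Agent tool over the mock property database might look like, here is a minimal sketch. The `Listing` fields, the sample rows, and `search_properties` are all invented for illustration; the real mock databases are not shown in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Listing:
    address: str
    price: int
    bedrooms: int
    city: str


# Made-up sample rows, not data from the actual mock database.
MOCK_LISTINGS = [
    Listing("12 Oak St", 450_000, 3, "Austin"),
    Listing("8 Elm Ave", 610_000, 4, "Austin"),
    Listing("3 Pine Rd", 380_000, 2, "Dallas"),
]


def search_properties(
    listings: list[Listing],
    *,
    city: Optional[str] = None,
    min_bedrooms: int = 0,
    max_price: Optional[int] = None,
) -> list[Listing]:
    """Return listings matching the caller's requirements, cheapest first."""
    matches = [
        item
        for item in listings
        if (city is None or item.city.lower() == city.lower())
        and item.bedrooms >= min_bedrooms
        and (max_price is None or item.price <= max_price)
    ]
    return sorted(matches, key=lambda item: item.price)


for hit in search_properties(MOCK_LISTINGS, city="Austin", min_bedrooms=3):
    print(hit.address, hit.price)
```

Keeping the tool a pure function over a list makes it easy to swap the mock rows for an MLS feed or website scrape later.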

When deployed in production, this system would integrate directly with existing realtor infrastructure—pulling property listings from their public website or MLS feeds, connecting to scheduling platforms like Calendly or Cal.com, and accessing any proprietary data the realtor provides through standard APIs. The Gemini Live API handles all voice processing while Twilio manages call routing, creating a streamlined integration path. These public-facing integrations require no special privacy mechanisms since the data is already publicly available. However, sensitive components like lead scoring algorithms, lead storage, and proprietary market analysis would be securely stored in encrypted cloud databases with proper access controls and compliance measures to protect client information and business intelligence.

Code Implementation Progress

With the simplified architecture designed, I’m now actively implementing the Google ADK multi-agent system that integrates directly with Gemini Live API and Twilio. The current development focuses on building three core components: the Twilio integration layer that handles voice calls and connects to Gemini Live API, the Google ADK agents (Orchestrator, Realtor Agent, and Secretary Agent) that process conversation data and make intelligent decisions in real-time, and the integration layer that connects to external services like Google Calendar and property databases.

The implementation leverages Google Cloud Run for serverless deployment, Firestore for real-time state management between agents, and direct API connections for reliable communication. The system is designed to process natural language conversations through Gemini Live API while the multi-agent system coordinates business logic, lead qualification, and follow-up actions simultaneously. This creates a seamless experience where callers interact with what feels like a knowledgeable human assistant, while sophisticated AI agents work behind the scenes to qualify leads and coordinate follow-up actions.

Test-Driven Development Approach

Before writing the production code, I implemented a comprehensive test suite that validates every aspect of the system’s intended behavior. This test-first approach ensures the multi-agent system performs reliably under real-world conditions and meets all business requirements from day one.

Core Functionality Testing - Validates all webhook endpoints, lead qualification scenarios, and agent coordination:

    Run python -m pytest tests/test_scenarios.py -v
    ============================= test session starts ==============================
    platform linux -- Python 3.11.13, pytest-8.4.0, pluggy-1.6.0 -- /opt/hostedtoolcache/Python/3.11.13/x64/bin/python
    cachedir: .pytest_cache
    rootdir: /home/runner/work/voice-ai/voice-ai
    plugins: anyio-4.9.0, cov-6.2.0, mock-3.14.1
    collecting ... collected 12 items

    tests/test_scenarios.py::test_health_endpoint PASSED [  8%]
    tests/test_scenarios.py::test_webhook_validation PASSED [ 16%]
    tests/test_scenarios.py::test_hot_lead_scenario PASSED [ 25%]
    tests/test_scenarios.py::test_warm_lead_scenario PASSED [ 33%]
    tests/test_scenarios.py::test_cold_lead_scenario PASSED [ 41%]
    tests/test_scenarios.py::test_scheduling_scenario PASSED [ 50%]
    tests/test_scenarios.py::test_lead_scoring_algorithm PASSED [ 58%]
    tests/test_scenarios.py::test_property_search PASSED [ 66%]
    tests/test_scenarios.py::test_availability_check PASSED [ 75%]
    tests/test_scenarios.py::test_conversation_analysis PASSED [ 83%]
    tests/test_scenarios.py::test_test_endpoint PASSED [ 91%]
    tests/test_scenarios.py::test_error_handling PASSED [100%]

    ============================== 12 passed in 3.51s ==============================

Agent Intelligence Evaluation - Tests the quality and accuracy of multi-agent decision-making and response generation:

    Run python -m pytest tests/test_evaluation.py -v
    ============================= test session starts ==============================
    platform linux -- Python 3.11.13, pytest-8.4.0, pluggy-1.6.0 -- /opt/hostedtoolcache/Python/3.11.13/x64/bin/python
    cachedir: .pytest_cache
    rootdir: /home/runner/work/voice-ai/voice-ai
    plugins: anyio-4.9.0, cov-6.2.0, mock-3.14.1
    collecting ... collected 13 items

    tests/test_evaluation.py::TestAgentTrajectoryEvaluation::test_property_search_trajectory PASSED [  7%]
    tests/test_evaluation.py::TestAgentTrajectoryEvaluation::test_scheduling_trajectory PASSED [ 15%]
    tests/test_evaluation.py::TestAgentTrajectoryEvaluation::test_lead_qualification_trajectory PASSED [ 23%]
    tests/test_evaluation.py::TestResponseQualityEvaluation::test_hot_lead_response_quality PASSED [ 30%]
    tests/test_evaluation.py::TestResponseQualityEvaluation::test_cold_lead_response_quality PASSED [ 38%]
    tests/test_evaluation.py::TestResponseQualityEvaluation::test_scheduling_response_quality PASSED [ 46%]
    tests/test_evaluation.py::TestEvaluationMetrics::test_tool_trajectory_scoring PASSED [ 53%]
    tests/test_evaluation.py::TestEvaluationMetrics::test_response_match_scoring PASSED [ 61%]
    tests/test_evaluation.py::TestEvaluationMetrics::test_evaluation_criteria_thresholds PASSED [ 69%]
    tests/test_evaluation.py::TestMultiTurnEvaluation::test_progressive_engagement_evaluation PASSED [ 76%]
    tests/test_evaluation.py::TestMultiTurnEvaluation::test_conversation_context_evaluation PASSED [ 84%]
    tests/test_evaluation.py::TestErrorHandlingEvaluation::test_malformed_data_evaluation PASSED [ 92%]
    tests/test_evaluation.py::TestErrorHandlingEvaluation::test_missing_data_evaluation PASSED [100%]

    ============================== 13 passed in 3.45s ==============================

Performance & Scalability Testing - Ensures the system can handle production load with acceptable response times:

    Run python -m pytest tests/test_performance.py -v
    ============================= test session starts ==============================
    platform linux -- Python 3.11.13, pytest-8.4.0, pluggy-1.6.0 -- /opt/hostedtoolcache/Python/3.11.13/x64/bin/python
    cachedir: .pytest_cache
    rootdir: /home/runner/work/voice-ai/voice-ai
    plugins: anyio-4.9.0, cov-6.2.0, mock-3.14.1
    collecting ... collected 11 items

    tests/test_performance.py::TestResponseTimePerformance::test_webhook_response_time PASSED [  9%]
    tests/test_performance.py::TestResponseTimePerformance::test_property_search_performance PASSED [ 18%]
    tests/test_performance.py::TestResponseTimePerformance::test_lead_scoring_performance PASSED [ 27%]
    tests/test_performance.py::TestResponseTimePerformance::test_conversation_analysis_performance PASSED [ 36%]
    tests/test_performance.py::TestThroughputPerformance::test_concurrent_webhook_requests PASSED [ 45%]
    tests/test_performance.py::TestThroughputPerformance::test_database_query_throughput PASSED [ 54%]
    tests/test_performance.py::TestThroughputPerformance::test_scoring_throughput PASSED [ 63%]
    tests/test_performance.py::TestMemoryPerformance::test_memory_usage_stability PASSED [ 72%]
    tests/test_performance.py::TestMemoryPerformance::test_large_data_handling PASSED [ 81%]
    tests/test_performance.py::TestMemoryPerformance::test_increasing_load_performance PASSED [ 90%]
    tests/test_performance.py::TestMemoryPerformance::test_sustained_load_performance PASSED [100%]

    ============================== 11 passed in 3.62s ==============================

This comprehensive testing approach validates that the system correctly handles all lead qualification scenarios (hot, warm, cold), maintains sub-second response times for webhook calls, and scales reliably under concurrent load. With 36 tests covering functionality, intelligence evaluation, and performance benchmarks, the implementation is built on a solid foundation that ensures production reliability.
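To make the hot/warm/cold scenarios concrete, here is a minimal sketch of a scoring function with tests in the same spirit. The signals, weights, and thresholds are assumptions chosen for illustration; they are not the project's actual scoring algorithm, and the test bodies are not copied from the real suite.

```python
def score_lead(*, preapproved: bool, timeline_months: int, wants_showing: bool) -> int:
    """Score a lead from 0 to 100 using three illustrative signals."""
    score = 0
    if preapproved:
        score += 40  # financing already in place
    if timeline_months <= 3:
        score += 40  # buying soon
    elif timeline_months <= 6:
        score += 20  # buying within half a year
    if wants_showing:
        score += 20  # asked to see a property
    return score


def classify_lead(score: int) -> str:
    """Map a numeric score to a tier (thresholds are assumptions)."""
    if score >= 70:
        return "hot"
    if score >= 40:
        return "warm"
    return "cold"


def test_hot_lead_scenario():
    score = score_lead(preapproved=True, timeline_months=2, wants_showing=True)
    assert classify_lead(score) == "hot"


def test_warm_lead_scenario():
    score = score_lead(preapproved=False, timeline_months=5, wants_showing=True)
    assert classify_lead(score) == "warm"


def test_cold_lead_scenario():
    score = score_lead(preapproved=False, timeline_months=12, wants_showing=False)
    assert classify_lead(score) == "cold"
```

Plain `assert`-based functions like these are collected by pytest without any extra imports.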

Automated CI/CD Pipeline

The development workflow is structured around automated testing and deployment to ensure code quality and seamless production releases. The CI/CD pipeline is designed to catch issues early and automate the path from code commit to production deployment.

Current Pipeline Structure:

- Code Push Trigger - Any push to the repository automatically triggers the CI pipeline

- Automated Testing - All 36 tests (scenarios, evaluation, and performance) run in parallel

- Container Build - Upon test success, the system builds a production Docker image

- Deployment Gate - Manual approval required before deploying to Google Cloud Platform

- GCP Deployment - Automated deployment to Cloud Run with proper environment configuration

Current Status: The automated testing and container build phases are fully operational. The pipeline successfully validates code quality and builds deployment-ready images. However, the final GCP deployment automation is not yet implemented—we’re currently at the integration testing phase, validating that all components work together correctly before setting up the production deployment automation.

This approach ensures that only thoroughly tested, validated code reaches production while maintaining the flexibility to manually oversee the deployment process during the initial rollout phase.

Google ADK Multi-Agent Implementation

The core of the system is built using Google’s Agent Development Kit (ADK), which provides the framework for orchestrating multiple specialized agents. The development approach focused on iterative refinement to ensure reliable agent coordination and high-quality responses.

Development Process:

The implementation began with building the Orchestration Agent and defining all the tools it would need to coordinate the other agents. This foundational approach ensured that the system architecture could handle the complex multi-agent workflows before adding specialized functionality.

Initial Validation: I tested several happy paths for both user inquiries and realtor scenarios to verify the basic orchestration flow worked correctly. After a few iterations of prompt refinement and tool documentation improvements, the core coordination patterns were solid.

Agent Development Pattern: Once the orchestration foundation was established, I implemented each specialized agent (Realtor Agent, Secretary Agent) using a systematic approach:

- Test a Path - Run the agent through a specific scenario

- Save Evaluation - Document the agent’s performance and decision quality

- Iterate on Evaluation - Improve prompts based on performance gaps

- Test Path - Validate improvements with the same scenario

- Evaluate → Tweak → Evaluate - Continue refinement until performance meets standards

This iterative approach ensured each agent not only functioned correctly but provided intelligent, contextually appropriate responses that would translate into natural conversation flow during live calls.
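The evaluate, tweak, evaluate loop depends on having a repeatable score for each saved path. One simple option is a tool-trajectory check: did the agent call the expected tools in the expected order? The sketch below is a simplified stand-in written in plain Python. ADK has its own evaluation tooling, which this does not use, and the tool names in the example are hypothetical.

```python
def tool_trajectory_score(expected: list[str], actual: list[str]) -> float:
    """Return 1.0 if the agent called exactly the expected tools in order, else 0.0."""
    return 1.0 if expected == actual else 0.0


def average_trajectory_score(cases: list[tuple[list[str], list[str]]]) -> float:
    """Average the per-case scores over (expected, actual) pairs."""
    if not cases:
        return 0.0
    return sum(tool_trajectory_score(exp, act) for exp, act in cases) / len(cases)


def passes(cases: list[tuple[list[str], list[str]]], threshold: float = 1.0) -> bool:
    """A saved path set passes when its average score meets the threshold."""
    return average_trajectory_score(cases) >= threshold


# Hypothetical saved paths: (expected tool calls, tool calls the agent made).
saved_cases = [
    (["search_properties", "check_availability"], ["search_properties", "check_availability"]),
    (["score_lead"], ["score_lead", "search_properties"]),
]
print(average_trajectory_score(saved_cases), passes(saved_cases))
```

A failing case points at the prompt or tool description to tweak next, and rerunning the same saved paths shows whether the tweak helped.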

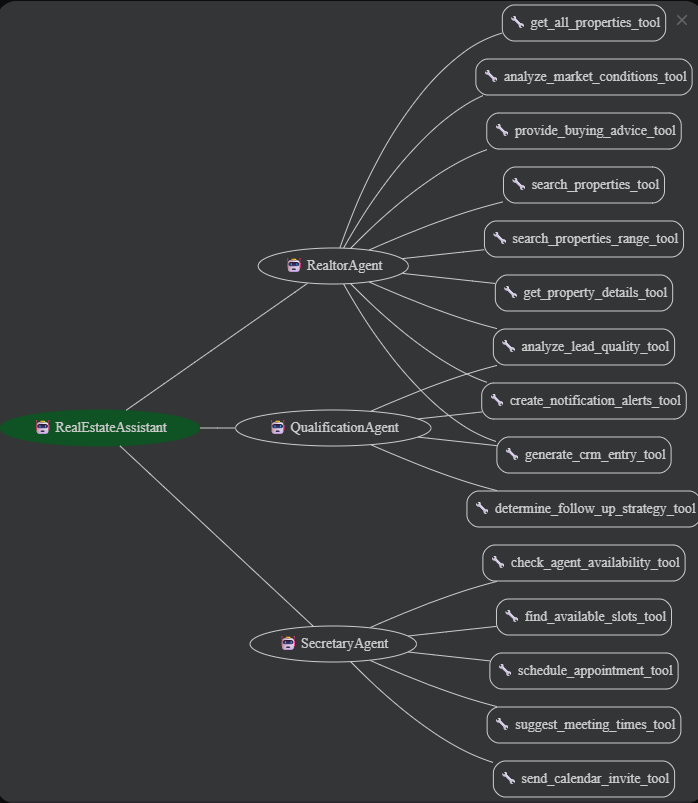

Agent Architecture Overview:

The diagram below shows how the multi-agent system is structured, with the RealEstateAssistant orchestrating three specialized agents—each with their own set of tools and responsibilities. This architecture ensures that every aspect of lead qualification and follow-up is handled by the most appropriate agent.

Happy Path Demonstrations:

The following videos show two different conversation flows that demonstrate the multi-agent system working together seamlessly. These represent the core scenarios the system handles—buyer inquiries and seller consultations—with the agents coordinating behind the scenes to provide intelligent responses and appropriate follow-up actions.

These demonstrations show the system successfully handling complex real estate scenarios with appropriate lead qualification, property matching, and follow-up coordination—all powered by the multi-agent architecture working in harmony.

The Economics That Make It Work

The numbers tell a compelling story. At 25 calls per day (a realistic volume for an active real estate business), the system processes 750 calls monthly with a 20% qualification rate, generating 150 qualified leads. With the direct Gemini Live API + Twilio integration, operational costs are significantly reduced while maintaining sophisticated conversation capabilities—translating to just $0.45 per qualified lead.

Cost Per Lead Reality Check

Compare this to traditional lead generation:

- AI System: $0.45 per qualified lead

- Human call center: $45-85 per qualified lead

- Lead generation services: $50-200 per qualified lead

- Cost reduction: 99% savings over human agents
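The volume and savings figures above can be checked with a few lines of arithmetic. The inputs are the numbers quoted in this section; the 30-day month is an assumption implied by 25 calls per day giving 750 calls.

```python
CALLS_PER_DAY = 25
DAYS_PER_MONTH = 30            # assumption implied by 25/day -> 750/month
QUALIFICATION_RATE = 0.20
AI_COST_PER_LEAD = 0.45        # dollars, as quoted above
HUMAN_COST_RANGE = (45.0, 85.0)  # dollars per qualified lead, as quoted above

monthly_calls = CALLS_PER_DAY * DAYS_PER_MONTH
qualified_leads = round(monthly_calls * QUALIFICATION_RATE)


def savings_pct(ai_cost: float, human_cost: float) -> float:
    """Percentage saved per qualified lead relative to the human cost."""
    return (1 - ai_cost / human_cost) * 100


low, high = (savings_pct(AI_COST_PER_LEAD, cost) for cost in HUMAN_COST_RANGE)
print(f"{monthly_calls} calls -> {qualified_leads} qualified leads per month")
print(f"savings vs. human call center: {low:.1f}% to {high:.1f}%")
```

Against the low end of the human range the saving is 99%, and it is slightly higher against the high end.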

ROI Scenarios

Even with conservative assumptions, the return is dramatic:

| Scenario | Monthly Leads | Conversion Rate | Deals/Month | Monthly Revenue | Annual ROI |

|---|---|---|---|---|---|

| Conservative | 75 | 2% | 1.5 | $12,000 | 4,400% |

| Realistic | 100 | 3% | 3.0 | $24,000 | 8,900% |

| Optimistic | 125 | 4% | 5.0 | $40,000 | 14,900% |
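The Deals/Month and Monthly Revenue columns follow directly from leads times conversion rate times an $8,000 average commission (the figure used in the break-even section). A short sketch of that arithmetic is below. The Annual ROI column is not recomputed here because it also depends on the monthly operating cost, which the table does not state.

```python
AVERAGE_COMMISSION = 8_000  # dollars per closed deal

# (monthly qualified leads, conversion rate) for each row of the table
SCENARIOS = {
    "Conservative": (75, 0.02),
    "Realistic": (100, 0.03),
    "Optimistic": (125, 0.04),
}


def monthly_outcome(leads: int, conversion_rate: float,
                    commission: int = AVERAGE_COMMISSION) -> tuple[float, float]:
    """Return (deals per month, monthly revenue in dollars)."""
    deals = round(leads * conversion_rate, 2)
    return deals, deals * commission


for name, (leads, rate) in SCENARIOS.items():
    deals, revenue = monthly_outcome(leads, rate)
    print(f"{name}: {deals} deals/month, ${revenue:,.0f}/month")
```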

The Break-Even Math

The system pays for itself with just 1-2 additional qualified leads per month. At typical real estate commission rates ($8,000 average), you need to close one extra deal every 25-50 months to break even. Under these assumptions, the system would reach positive ROI within the first month of operation.

Bottom line: For $12-16 per day, you get a system that never sleeps, never misses a call, and consistently qualifies leads at a fraction of traditional costs. The economics are so favorable that the real question isn’t whether you can afford to build this—it’s whether you can afford not to.

Next Steps: Pending Integration

The architecture and economic modeling are complete, but the actual system integration is pending. Once I move forward with building this system, I’ll document:

- Real-world performance metrics and call quality results

- Integration challenges with CRM systems and Google Workspace APIs

- Lessons learned from multi-agent coordination in production

- Actual ROI data from live lead qualification

The technical approach outlined above represents the planned implementation using Google ADK primitives. The business model calculations are based on current pricing from bland.ai, Google Cloud, and LLM providers.

This post is part of my ongoing exploration of practical AI automation. You can see more projects and experiments on my projects page, or read about my broader automation philosophy in “How Far Can AI Really Take You?”